The 4th Chinese Congress on Artificial Intelligence (CCAI 2018), led by the Chinese Association for Artificial Intelligence (CAAI), was held on July 28-29, 2018, in Shenzhen, China. As the largest official AI conference in China, CCAI is held annually to promote the advancement of artificial intelligence globally. I attended the Congress and summarized it to share AI trends with all quality professionals.

During the congress, I met Prof. Tie-niu Tan (谭铁牛) (Vice President of CAAI; Academician of CAS) and we took a photo as a memento.

I also met Prof. KF Wong (黃錦輝) (Associate Dean (External Affairs), Faculty of Engineering, CUHK; Professor, SEEM Dept., CUHK). We are both technical committee members in different disciplines of CAAI. He was appointed to the First Batch of Natural Language Processing Experts of CAAI, and I have been a committee member of the Extenics Society of CAAI since 2017.

Day 2 (29 July 2018):

At the beginning of the congress, Prof. Chengqing Zong (宗成庆) (Professor of CASIA) chaired the morning reporting session. Prof. Jiawei Han (韩家炜) (Abel Bliss Professor of UIUC; ACM/IEEE Fellow) was the first speaker, and his presentation was entitled “Mining Structures from Massive Text Data: A Cross Point of Data Mining, Machine Learning and Natural Language Processing”. Prof. Han said that over 80% of our data comes from text, natural language and social media; it is unstructured or semi-structured, noisy and dynamic, but inter-related. Accordingly, Prof. Han’s research roadmap is “Mining hidden structures from text data”, “Turning text data into multidimensional text-cubes and typed networks” and “Mining cubes and networks to generate actionable knowledge”.

First, Prof. Han introduced the PathPredict study, which predicts future co-authors of papers in international research journals. He then briefed us on multi-dimensional text analysis and noted that the bottleneck is mining structures from unstructured text.
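
To make the co-author prediction idea more concrete, here is a minimal sketch of one kind of feature such an approach could use: counting Author-Paper-Venue-Paper-Author paths between authors who have not yet written together. The toy data, the names and the choice of this single path type are my own assumptions for illustration, not details from the talk.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical toy bibliography: paper id -> (authors, venue).
PAPERS = {
    "p1": (["alice", "bob"], "KDD"),
    "p2": (["carol"], "KDD"),
    "p3": (["alice"], "ACL"),
    "p4": (["carol", "dave"], "ACL"),
}

def venue_path_counts(papers):
    """Count Author-Paper-Venue-Paper-Author path instances per author pair."""
    pubs = defaultdict(lambda: defaultdict(int))  # author -> venue -> paper count
    for authors, venue in papers.values():
        for author in authors:
            pubs[author][venue] += 1
    counts = {}
    for a, b in combinations(sorted(pubs), 2):
        counts[(a, b)] = sum(n * pubs[b].get(v, 0) for v, n in pubs[a].items())
    return counts

def existing_coauthors(papers):
    """Return the set of author pairs that already share a paper."""
    pairs = set()
    for authors, _ in papers.values():
        pairs.update(combinations(sorted(authors), 2))
    return pairs

counts = venue_path_counts(PAPERS)
known = existing_coauthors(PAPERS)
# Rank pairs that have not co-authored yet by how many venue paths connect them.
candidates = sorted(
    (pair for pair in counts if pair not in known),
    key=lambda pair: counts[pair],
    reverse=True,
)
print(candidates[0], counts[candidates[0]])
```

In a real setting, several such path counts would be fed as features into a supervised model rather than used directly as a ranking.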

Prof. Han then explained the Phrase Mining concept, which goes from a raw corpus to quality phrases and a segmented corpus. He introduced the following criteria for judging the quality of phrases:

i) Popularity (e.g. “information retrieval” vs. “cross-language information retrieval”)

ii) Concordance

iii) Informativeness

iv) Completeness
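
As a rough illustration of the first two criteria, the sketch below scores two-word candidates by popularity (raw frequency) and concordance (pointwise mutual information between the two words). This is a simplified stand-in that I chose for illustration, not the scoring used in Prof. Han's phrase mining work; the function name, the threshold and the toy corpus are assumptions.

```python
import math
from collections import Counter

def phrase_scores(docs, min_count=2):
    """Score adjacent word pairs by popularity (count) and concordance (PMI)."""
    unigrams, bigrams = Counter(), Counter()
    for doc in docs:
        tokens = doc.lower().split()
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))
    total_uni = sum(unigrams.values())
    total_bi = sum(bigrams.values())
    scores = {}
    for (w1, w2), count in bigrams.items():
        if count < min_count:  # popularity filter: drop rare candidates
            continue
        p_pair = count / total_bi
        p_w1 = unigrams[w1] / total_uni
        p_w2 = unigrams[w2] / total_uni
        # PMI > 0 means the words co-occur more often than chance would predict.
        pmi = math.log(p_pair / (p_w1 * p_w2))
        scores[f"{w1} {w2}"] = {"popularity": count, "concordance": pmi}
    return scores

docs = [
    "information retrieval systems rank documents",
    "cross-language information retrieval is harder than information retrieval",
    "this paper studies information retrieval",
]
scores = phrase_scores(docs)
for phrase, s in scores.items():
    print(phrase, s["popularity"], round(s["concordance"], 2))
```

Informativeness and completeness need more context (for example, comparison against background text and against longer or shorter candidate phrases), so they are left out of this sketch.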

After that, Prof. Han explained Recognizing Typed Entities, which enables structured analysis of an unstructured text corpus. However, entity recognition and typing face three challenges: “Domain Restriction”, “Name Ambiguity” and “Context Sparsity”. He used President Trump as an example to explain Fine-Grained Entity Typing. He also mentioned the Meta-Pattern Methodology, which separates the meta pattern ($Country President $Politician) from the entity (USA) and the attribute value (Barack Obama).
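
The meta-pattern idea can be sketched as follows: replace typed entity mentions in a sentence with their type placeholders and keep the mentions as the extracted values. The small typed lexicon and the function name are hypothetical; a real system would obtain the types from the entity typing step rather than from a hand-written dictionary.

```python
import re

# Hypothetical typed lexicon standing in for the output of entity typing.
TYPED_ENTITIES = {
    "USA": "$Country",
    "Barack Obama": "$Politician",
}

def to_meta_pattern(sentence):
    """Replace known mentions with type placeholders; return (pattern, mentions)."""
    # Try longer names first so multi-word mentions are matched whole.
    names = sorted(TYPED_ENTITIES, key=len, reverse=True)
    regex = re.compile("|".join(re.escape(name) for name in names))
    mentions = []

    def substitute(match):
        mentions.append(match.group(0))
        return TYPED_ENTITIES[match.group(0)]

    pattern = regex.sub(substitute, sentence)
    return pattern, mentions

pattern, mentions = to_meta_pattern("USA President Barack Obama")
print(pattern)   # $Country President $Politician
print(mentions)  # ['USA', 'Barack Obama']
```

Sentences that reduce to the same pattern can then be grouped, so that one pattern yields many (entity, attribute value) pairs.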

Lastly, Prof. Han stated that a key problem in going from big data to big knowledge is multi-dimensional mining of massive text data. He also shared his research journey with us.

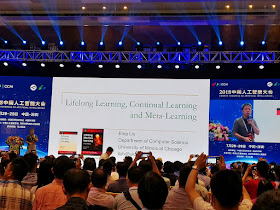

Prof. Bing Liu (刘兵) (Distinguished Professor of UIC; AAAI/ACM/IEEE Fellow) was the second speaker, and his topic was “Lifelong Learning, Continual Learning and Meta-Learning”. Prof. Liu told us about getting his driver's license: even after passing the test, he continued to “learn on the job.” Over time he got better and better, and that is real intelligence (not AlphaGo’s ability to play Go). Machine Learning (ML) is massive data-driven optimization, but humans are not great at optimization.

Then Prof. Liu introduced the current ML paradigm, isolated single-task learning, which needs a large number of training examples. ML is suitable for well-defined tasks in restricted, closed environments. But humans never learn in isolation or from scratch. Lifelong Learning (LL) mimics this human learning capability; the goal of LL is therefore to create a machine that learns like humans.

The Lifelong Learning (LL) model was introduced with a diagram. The key characteristics of LL include:

i) Continual learning process

ii) Knowledge accumulation in a Knowledge Base (KB) (long-term memory)

iii) Using and adapting previously learned knowledge for future learning, discovering new problems and learning them incrementally

iv) Learning on the job, i.e. learning during model application or testing (after the initial model is built)
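
The first three characteristics can be sketched with a toy learner that keeps a knowledge base across tasks and starts each new task from it instead of from scratch. The class, the word-count model and the review examples are all assumptions made up for illustration; this is not Prof. Liu's algorithm.

```python
from collections import Counter, defaultdict

class LifelongLearner:
    """Toy lifelong learner: per-label word counts accumulated across tasks."""

    def __init__(self):
        # Knowledge base (long-term memory) shared by all tasks.
        self.kb = defaultdict(Counter)
        self.tasks_seen = 0

    def learn_task(self, examples):
        """Learn a task from (text, label) pairs, reusing past knowledge."""
        model = defaultdict(Counter)
        for label, counts in self.kb.items():
            model[label].update(counts)   # start from what earlier tasks taught
        for text, label in examples:
            words = text.lower().split()
            model[label].update(words)    # learn the current task
            self.kb[label].update(words)  # accumulate into the knowledge base
        self.tasks_seen += 1
        return model

    @staticmethod
    def predict(model, text):
        """Pick the label whose known words overlap most with the text."""
        words = text.lower().split()
        scores = {label: sum(counts[w] for w in words)
                  for label, counts in model.items()}
        return max(scores, key=scores.get)

learner = LifelongLearner()
# Task 1: camera reviews.
learner.learn_task([("great picture quality", "pos"), ("terrible battery", "neg")])
# Task 2: phone reviews, with very few examples of its own.
phone_model = learner.learn_task([("nice screen", "pos"), ("slow charging", "neg")])
# "great" was only seen in task 1, yet it helps on the phone task.
print(learner.predict(phone_model, "great phone"))  # pos
```

Learning on the job, the fourth characteristic, would additionally update the knowledge base while the model is being used, which this sketch does not attempt.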

Prof. Liu then mentioned LL in supervised and unsupervised learning, including:

i) Lifelong naïve Bayesian classification

ii) Lifelong Topic Modeling (LTM)

iii) Lifelong learning in graph label propagation

The extended LL model was shown, and he also briefed us on Meta-learning (also called learning to learn). At the end, he summarized that although human learning is well known, LL still faces huge challenges and remains an open area with many opportunities.

Prof. Jiaya Jia (贾佳亚) (Professor of CUHK; IEEE Fellow) was the third speaker, and his presentation was “Advancement of Large-Scale Image Understanding and Segmentation”. First, he illustrated the importance of computer vision through examples from daily life.

Then Prof. Jia demonstrated some research highlights, including Deblurring, Image Generation, Make-up Go, Face Edit, etc.

Prof. Jia then discussed Semantic Segmentation, including PSPNet (2017) and ICNet (2018), and Instance Segmentation, including Multi-scale Patch Aggregation (2016), Sequential Grouping Networks (2017) and PANet (2018). He then introduced end-to-end training.

After that, Prof. Jia demonstrated Instance Segmentation for autonomous driving. He also introduced object modeling approaches, including Object Proposals, Aggregation of Proposals, Generalized Parts, Line Segments and Instance Boundaries. The first two are top-down methods and the last three are bottom-up methods. At the end, Prof. Jia shared his team's excellent results in the COCO competitions.

Prof. Zhi-Hua Zhou (Professor of Nanjing University; AAAI/ACM/IEEE Fellow) was the fourth speaker, and his topic was entitled “A Preliminary Exploration to Deep Forest”. First, Prof. Zhou introduced AI, Machine Learning (ML) and Deep Learning (DL), and said that the current wave of AI is driven by breakthroughs in DL (especially in images and video, speech and audio, and text and language).

Then Prof. Zhou introduced Deep Learning (DL), quoting SIAM News (Jun 2017): DL, a subfield of machine learning that uses “deep neural networks”, has achieved state-of-the-art results in fields such as image and text recognition. He described the Deep Neural Network (DNN), which is trained with Backpropagation (BP). BP is a supervised learning algorithm for training Multi-layer Perceptrons (MLP), a type of artificial neural network.

Prof. Zhou then briefed us on deep models. The most crucial elements of deep models are “Layer-by-layer processing”, “Feature transformation” and “Sufficient model complexity”. However, the disadvantages of DNNs include too many parameters, models that are usually more complex than needed, the need for big training data, black-box behavior and difficulty of analysis.

After that, Prof. Zhou introduced Deep Forest, a deep learning model that is not a Deep Neural Network (DNN) and does not need the BP algorithm. gcForest is just the start of Deep Forest; it uses “Diversity” for ensembles.
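
To give a feel for how a forest-based model can be "deep" without backpropagation, here is a minimal cascade sketch built with scikit-learn. Each level holds two different kinds of forests (for diversity), and their class-probability vectors are appended to the raw features for the next level. This is my own simplification under stated assumptions: `ExtraTreesClassifier` stands in for the completely-random forests, the level count is fixed, labels are assumed to be 0..k-1, and the multi-grained scanning part of gcForest is omitted.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier
from sklearn.model_selection import cross_val_predict, train_test_split

def fit_cascade(X, y, n_levels=2, seed=0):
    """Fit a cascade; each level sees raw features plus the previous class vectors."""
    levels, features = [], X
    for level in range(n_levels):
        forests = [
            RandomForestClassifier(n_estimators=20, random_state=seed + level),
            ExtraTreesClassifier(n_estimators=20, random_state=seed + level),
        ]
        # Out-of-fold probabilities limit overfitting when passed to the next level.
        probas = [
            cross_val_predict(f, features, y, cv=3, method="predict_proba")
            for f in forests
        ]
        for forest in forests:
            forest.fit(features, y)
        levels.append(forests)
        features = np.hstack([X] + probas)
    return levels

def predict_cascade(levels, X):
    """Run X through every level and average the last level's class vectors."""
    features = X
    for forests in levels:
        probas = [forest.predict_proba(features) for forest in forests]
        features = np.hstack([X] + probas)
    return np.mean(probas, axis=0).argmax(axis=1)

X, y = make_classification(n_samples=300, n_features=10, n_informative=5,
                           random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
levels = fit_cascade(X_train, y_train)
accuracy = float((predict_cascade(levels, X_test) == y_test).mean())
print(f"cascade accuracy: {accuracy:.2f}")
```

No gradient is computed anywhere: the layer-by-layer processing comes purely from stacking ensembles of trees.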

Lastly, Prof. Zhou used the Convolutional Neural Network (CNN) as an example to explain that a new technology takes a long time to go from inception to maturity. He also mentioned the ZTE incident, which showed that we lack chips and algorithms (缺芯少魂). To reduce this risk, we need to develop our own ML chips and system platforms (to avoid the GPU and TensorFlow monopoly) and use Deep Forest (a non-NN deep learning technology) to replace existing DNNs.

In the afternoon session, Prof. Bo Xu (徐波) (Director of Automation Institute of CAS; Dean of Artificial Intelligence Institute of NUST) was the session chair.

Prof. Mu-Ming Poo (蒲慕明) (Director of Institute of Neuroscience; Academician of CAS; Academician of NAS) was the fifth speaker, and his presentation was titled “Brain Science and Brain-like Machine Learning”. At the beginning, Prof. Poo briefed us on the history of brain science.

Then Prof. Poo introduced the human neural network, which contains more than 10^11 nerve cells and 10^14 connections (synapses).

Prof. Poo then described the three levels at which neural connections are mapped: macroscopic, mesoscopic and microscopic. A 3D diagram of mouse axons was shown.

After that, he demonstrated zebrafish brain activity during escape behavior. He explained Hebb’s Learning Rule (1949): "Cells that fire together wire together."
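
Hebb's rule is commonly written as a weight update proportional to the product of pre- and post-synaptic activity, Δw = η · post · pre. The sketch below applies that textbook form to a tiny network; the learning rate and the activity patterns are arbitrary values chosen for illustration.

```python
def hebbian_update(weights, pre, post, lr=0.1):
    """Return new weights with w[i][j] increased by lr * post[i] * pre[j]."""
    return [
        [w + lr * post_i * pre_j for w, pre_j in zip(row, pre)]
        for row, post_i in zip(weights, post)
    ]

# Two post-synaptic cells, three pre-synaptic cells, all weights start at zero.
weights = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
pre = [1, 0, 1]   # pre-synaptic cells 0 and 2 fire
post = [1, 0]     # only post-synaptic cell 0 fires

for _ in range(5):
    weights = hebbian_update(weights, pre, post)

print(weights)
```

Only the connections between cells that were active together grow; the others stay at zero, which is the "fire together, wire together" behavior in miniature.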

Finally, Prof. Poo concluded that AI could benchmark against natural neural networks, for example modulatory neurons, multi-directional connections, and axonal back-propagation and lateral propagation.

Forum – How to Advance the Development of Artificial Intelligence in China?

Ms. Quanling Zhang (张泉灵) (Founding partner of Znstartups) was the session chair.

The first question Ms. Zhang asked all the guests was which of three AI companies (AI chip, autonomous car and ML solution) she should invest in. The guests found it difficult to answer, as it depends on the situation.

Prof. Deyi Li (李德毅) (President of CAAI, Academician of CAE) shared his view that the autonomous car is a trend and is already being implemented in China. To advance the development of AI in China, Prof. Li said we need to establish a Smart Industry Alliance.

Then Prof. Bo Xu (徐波) (Director of Automation Institute of CAS; Dean of Artificial Intelligence Institute of NUST) shared his view on this topic from the perspective of automation research.

Prof. Xu briefed us on the research planning at CAS, which includes neuroscience, new-generation AI development, complex human-machine systems, and AI+ industry. Lastly, he said, “Stand firm at low tide and stay calm at high tide” (低潮時堅守, 高潮時冷靜).

Dr. Haifeng Wang (王海峰) (Senior Vice President of Baidu; ACL Fellow) then shared AI trends and challenges. The challenges in basic theory are small sample sizes, low energy consumption and explainable results. The challenges in application technology are perception techniques and cognition techniques. The challenges for industry are software and hardware integration, Deep Learning frameworks and AI chips. The challenges in application systems are the integrated use of multiple techniques and system innovation in integrated scenarios. Lastly, he said AI systems need to evolve continually.

At the end of the Day 2 program, all guests took a group photo as a memento.

Reference:

CCAI 2018 - http://ccai2018.caai.cn/englishPage.html

CAAI - http://www.caai.cn/
